Moving into a New Era of Robotics Tooling with Multimodal Data Observability

Even in the best-case scenario, robotics development is a massive challenge: it requires committed collaboration between multiple teams, pushes the boundaries of engineering and physics, and calls for highly precise alignment between hardware and software.

And that’s the best case. Now imagine building a robot in a world without multimodal observability! Luckily, robotics developers now have access to off-the-shelf tooling designed specifically for building robots.

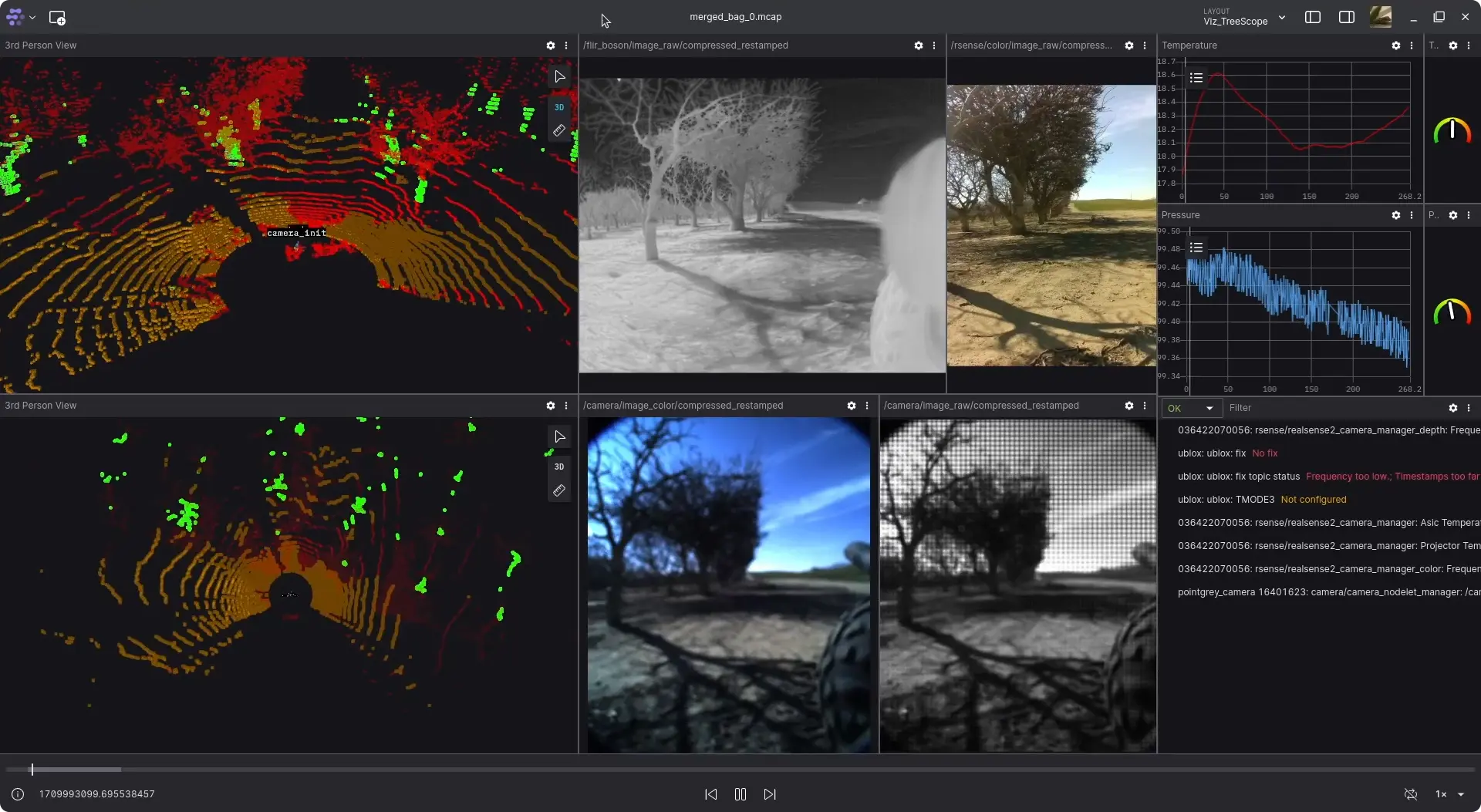

But until recently, there was a lack of quality tooling to offload, analyze, manage, visualize, and understand the huge amounts of data produced by robots. Robotics data is multimodal and has complex requirements that traditional data tooling does not address, such as complex serialization, asynchronous streams from multiple devices, and a wide range of data types (including LiDAR scans, camera images, point clouds, thermal readings, time series, and operational logs).

Developing reliable, quality tools for these requirements is complex, and many organizations have resorted to hacking together their own solutions in house, wasting precious engineering resources. In contrast, developers at SaaS companies have long had out-of-the-box tools at their fingertips for authentication, observability, and delivery, with no need to build their own custom tools.

In robotics, the transition from an initial conceptual sketch to a commercially viable platform is a massive feat that involves several teams and requires purpose-built observability tooling, a class of software that is still in its infancy. In this article, we’ll walk through the stages of commercial robotics development and show how new, off-the-shelf tooling options are making data management, processing, and observability easier and more accessible for teams that lack the resources to build complex systems in house.

From Idea to Prototype

Like anything else, building a robot begins with an idea and a goal: perhaps automating repetitive tasks, enhancing existing capabilities, or achieving precision beyond what a human can manage. This idea then undergoes design and simulation stages, where its feasibility is rigorously tested against the unforgiving laws of physics and engineering. Transitioning from digital blueprints to tangible prototypes is a pivotal phase. It entails a careful selection of materials, electronic components, and software architectures, and it is what allows roboticists to turn their ideas into machines capable of interacting with the physical world.

This stage involves collaboration and iteration between team members with highly diverse and specialized skills, including mechanical engineering, electronics, computer science, and AI/ML. Together, this group navigates the complexities of integrating diverse hardware with sophisticated software, ensuring seamless communication and functionality.

Because the goal of this phase is to understand the feasibility of the idea, rather than to make it commercially viable, robotics teams have the freedom to work without the constraint of having to build a beautiful, smoothly functioning product, at least for now. Parts are bolted together, software is updated on the fly, and planning and predictability go out the window. Each iteration brings the prototype closer to its intended design, but also uncovers new challenges.

Scaling a Prototype for Commercialization

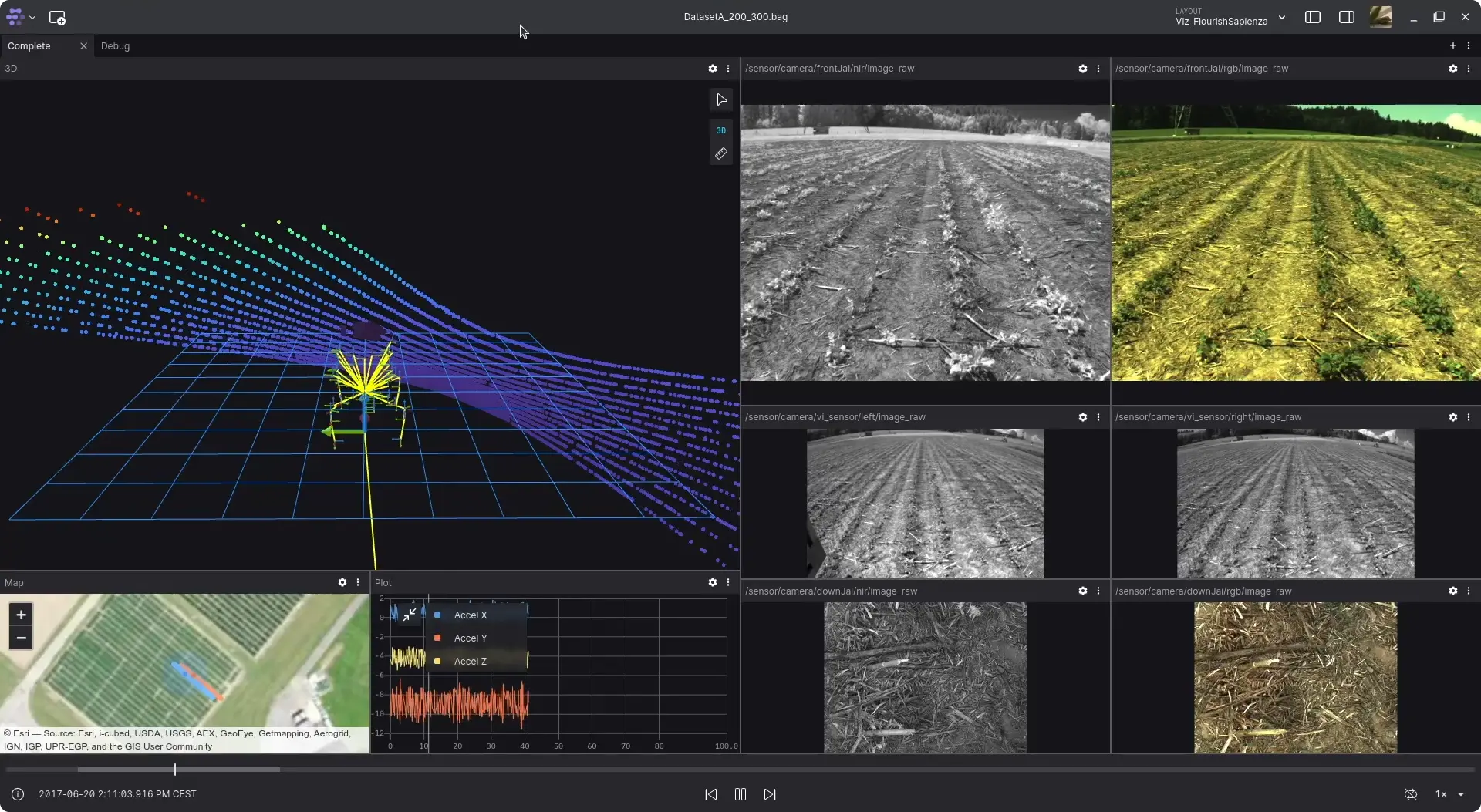

Building a working, cleanly designed prototype is only the beginning. For most robotics companies, the bigger feat is transforming that prototype into a commercially viable product. This journey demands an exhaustive analysis of the prototype’s performance across myriad conditions to identify enhancements and refinements. It involves rigorous data collection and analysis under varying scenarios, covering everything from sensor accuracy and operational efficiency to the robot’s perceived environment and real-time performance metrics.

The goal of these highly complex analyses is to understand what the robot was sensing at any given point in time, so that roboticists can reconstruct what it was thinking and why it acted as it did. These insights allow builders to fine-tune their creations, optimize algorithms, and make informed decisions on hardware modifications and software updates, ensuring the robot's functionality aligns with real-world environments.
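To make "what the robot was sensing at any given point in time" concrete, here is a minimal sketch of a latest-at lookup across asynchronous sensor streams. The `Stream` class, the `snapshot` helper, the stream names, and the nanosecond timestamps are all hypothetical and chosen for illustration; they are not the API of any particular tool.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class Stream:
    """One sensor stream, kept sorted by timestamp (hypothetical structure)."""
    timestamps: List[int] = field(default_factory=list)  # nanoseconds
    messages: List[Any] = field(default_factory=list)

    def append(self, t_ns: int, message: Any) -> None:
        # Assumption: each stream delivers messages in non-decreasing time order.
        if self.timestamps and t_ns < self.timestamps[-1]:
            raise ValueError("out-of-order message")
        self.timestamps.append(t_ns)
        self.messages.append(message)

    def latest_at(self, t_ns: int) -> Optional[Tuple[int, Any]]:
        """Return the most recent (timestamp, message) at or before t_ns."""
        i = bisect_right(self.timestamps, t_ns)
        if i == 0:
            return None  # nothing had been sensed on this stream yet
        return self.timestamps[i - 1], self.messages[i - 1]


def snapshot(streams: Dict[str, Stream], t_ns: int) -> Dict[str, Optional[Tuple[int, Any]]]:
    """Collect what every stream had most recently reported at time t_ns."""
    return {name: stream.latest_at(t_ns) for name, stream in streams.items()}


# Three streams that publish at different, unsynchronized rates.
streams = {"camera": Stream(), "lidar": Stream(), "battery": Stream()}
streams["camera"].append(100, "frame_0")
streams["camera"].append(133, "frame_1")
streams["lidar"].append(90, "scan_0")
streams["lidar"].append(190, "scan_1")
streams["battery"].append(150, 0.82)

state = snapshot(streams, 140)
# camera -> (133, "frame_1"), lidar -> (90, "scan_0"), battery -> None
```

Because each stream is sorted, the lookup is a binary search rather than a scan, which matters once recordings grow to millions of messages. A real system would also need to handle out-of-order arrival and clock skew between devices, which this sketch deliberately ignores.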

Navigating the Multimodal Maze

At every phase of the robotics development process, roboticists have to understand how their machines are sensing (receiving inputs from their external environments), thinking (processing those inputs), and acting (making use of that processed data). Those inputs come from multiple sources and in multiple formats, including video streams encoded with block-oriented, motion-compensated compression standards.

Both live and recorded streams of data must be queryable and stitched together to visualize what the robot perceived and how it performed under varying, or even unknown, environmental conditions. Roboticists need to visualize and manage multimodal robotic data in a comprehensive ecosystem designed to tackle the very specific challenges of robotic development:

-

The data model must be a time-aware system based on the principle of composition over inheritance, meaning that every entity is defined not by a type hierarchy, but by the components that are associated with it. This offers a structure that's both flexible and powerful, and mirrors the dynamic operations of robots, providing users with an intuitive way to model, manage, and interpret complex data.

-

The data layer and all the complexity must be simplified to address the convoluted issues of data serialization, transportation, and processing, whether dealing with asynchronous data streams from multiple diverse sources (like camera images, LiDAR sensors, point clouds, thermal readings, times series telemetry, operational logs, etc.) or indexing across various timestamps, including receive_time and log_time.

-

The data semantics has to understand spatial relationships due to the inherent spatial nature of robotics. Advanced data semantics will effortlessly map spatial data, leveraging projections and transform hierarchies. This is pivotal for developing robots that navigate and understand their environments with precision.

-

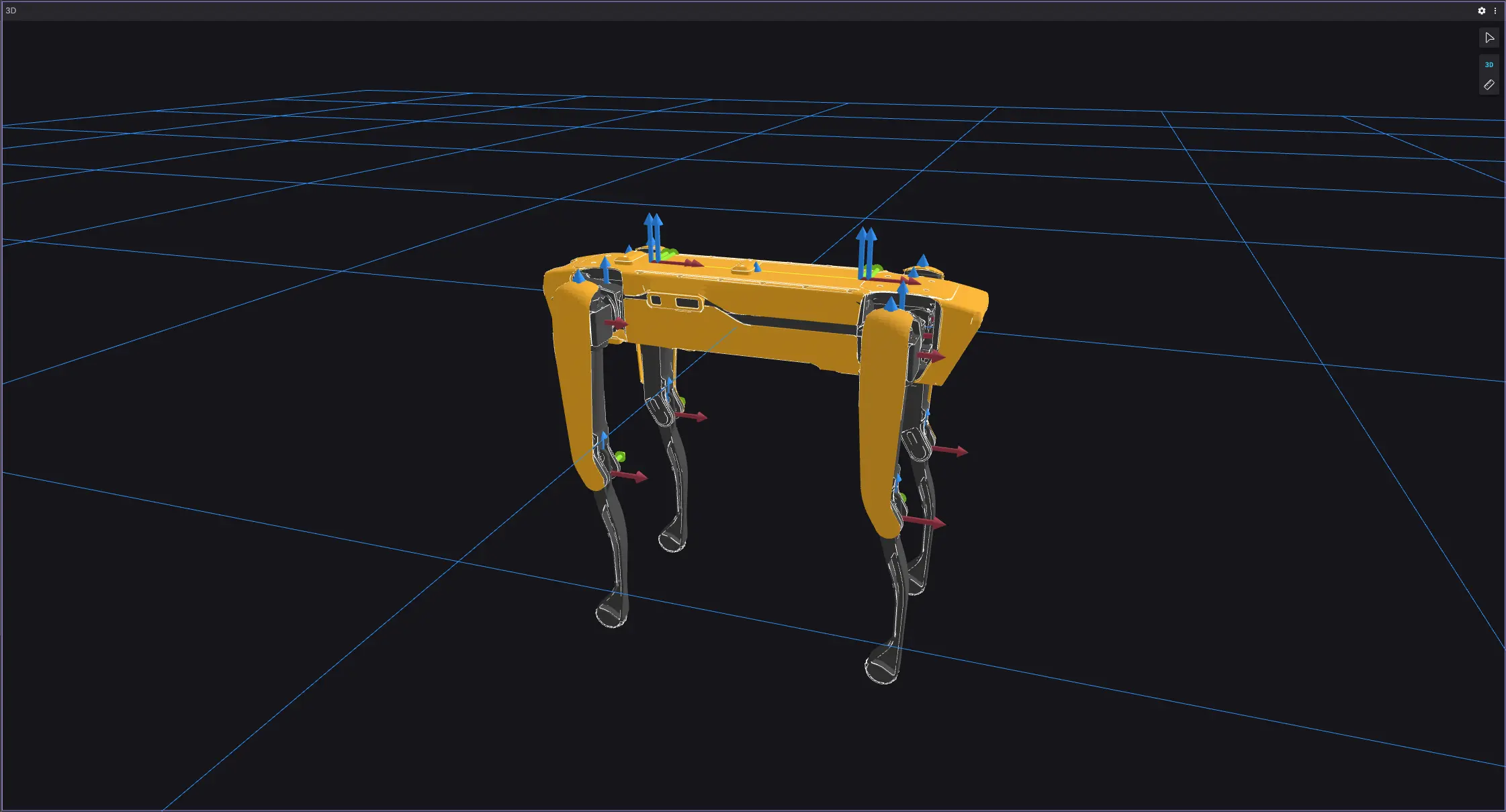

The visualization engine, which is at the core of accelerating development and scaling commercialization, must be capable of rendering complex 2D and 3D data. A state-of-the-art visualization engine provides the tools to interactively explore live and historical data, offering insights that are critical for optimization, triage, debugging and testing new autonomous models and updates.
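As a rough illustration of the first two requirements, the sketch below models entities by composition (an entity is just a path plus whatever components have been logged to it) and lets the same data be queried along either a `log_time` or a `receive_time` timeline. `EntityStore`, `Sample`, the entity paths, and the component names are hypothetical names invented for this example, not the data model of Foxglove or any other product.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

TIMELINES = ("log_time", "receive_time")


@dataclass(frozen=True)
class Sample:
    log_time: int      # when the value was recorded, in nanoseconds
    receive_time: int  # when the value was received, in nanoseconds
    value: Any


class EntityStore:
    """Entities have no class hierarchy; they are defined by their components."""

    def __init__(self) -> None:
        # entity path -> component name -> logged samples
        self._data: Dict[str, Dict[str, List[Sample]]] = defaultdict(
            lambda: defaultdict(list)
        )

    def log(self, entity: str, component: str, value: Any,
            *, log_time: int, receive_time: int) -> None:
        self._data[entity][component].append(Sample(log_time, receive_time, value))

    def components(self, entity: str) -> List[str]:
        """List the components that currently define this entity."""
        return sorted(self._data.get(entity, {}))

    def latest(self, entity: str, component: str, t: int,
               timeline: str = "log_time") -> Optional[Any]:
        """Return the newest value at or before t on the chosen timeline."""
        if timeline not in TIMELINES:
            raise ValueError(f"unknown timeline: {timeline}")
        samples = self._data.get(entity, {}).get(component, [])
        candidates = [s for s in samples if getattr(s, timeline) <= t]
        if not candidates:
            return None
        return max(candidates, key=lambda s: getattr(s, timeline)).value


store = EntityStore()
store.log("robot/base", "position", (0.0, 0.0), log_time=10, receive_time=12)
store.log("robot/base", "position", (1.0, 0.5), log_time=20, receive_time=35)
store.log("robot/base", "battery", 0.9, log_time=15, receive_time=16)
store.log("robot/camera", "image", "frame_0", log_time=18, receive_time=19)

parts = store.components("robot/base")                       # ['battery', 'position']
by_log = store.latest("robot/base", "position", 30)          # (1.0, 0.5)
by_recv = store.latest("robot/base", "position", 30,
                       timeline="receive_time")              # (0.0, 0.0)
```

The two queries at `t = 30` return different positions because the second sample was recorded at 20 but not received until 35, which is the kind of discrepancy that indexing on more than one timestamp is meant to expose. Adding a new kind of data to an entity means logging a new component, with no change to any type definition.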

Transforming Robotic Development

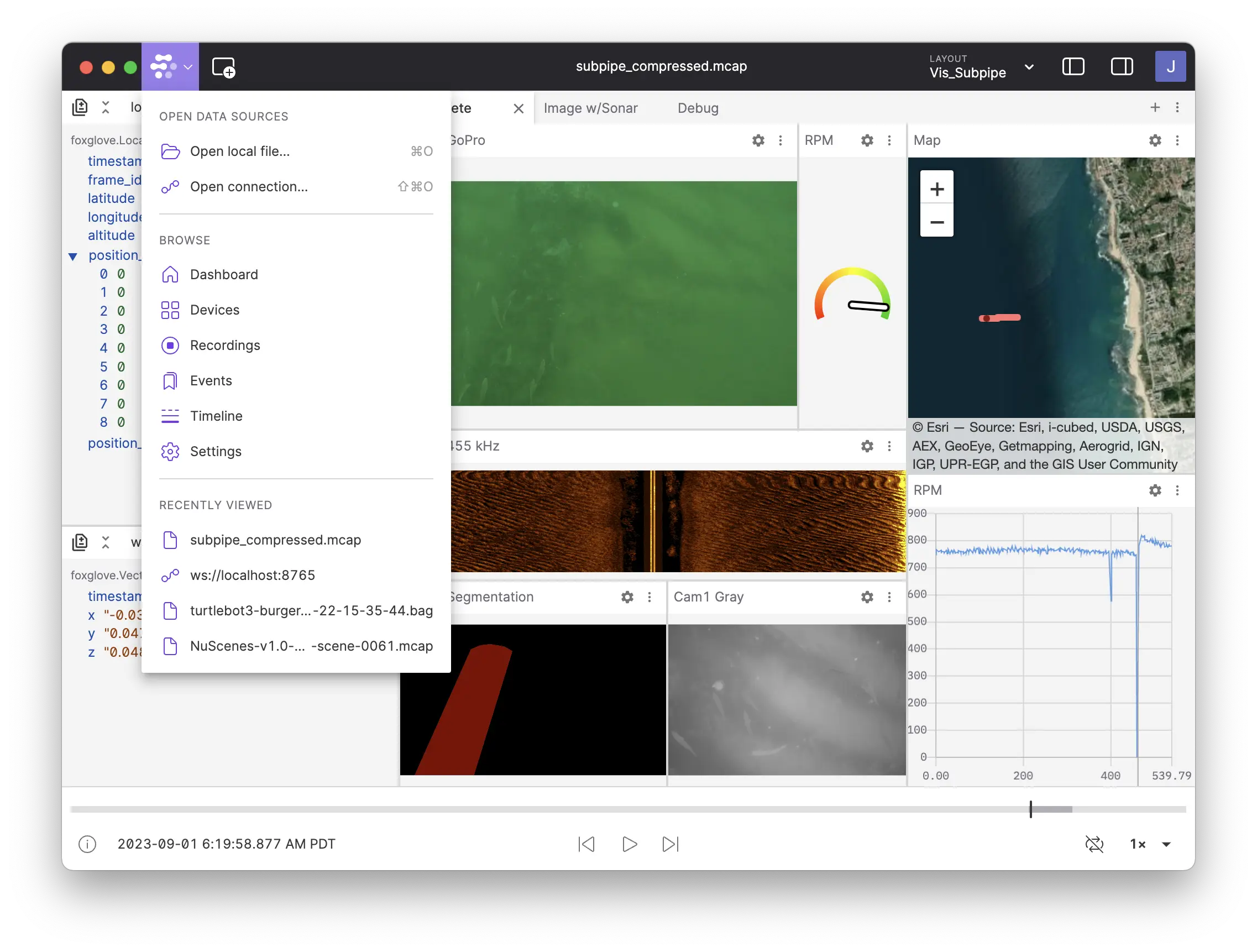

Robotic development, by its nature, is a complex amalgamation of science, engineering, and art. The journey from an initial idea to a commercially successful product is rife with challenges that test the limits of human ingenuity and technological capability. With multimodal data visualization and management platforms, like Foxglove, these challenges become opportunities for growth and innovation.

Foxglove allows robotics developers to glean quick, thorough, and accurate information from their robotics data, bringing the industry up to the level of tooling already found in software development. Robotics development will always have its challenges, but Foxglove plays a critical role in helping robotics teams sidestep some of the most cumbersome obstacles to pushing the boundaries of robotics and unlocking the full potential of their fleets. Give it a try today, free.

Read more:

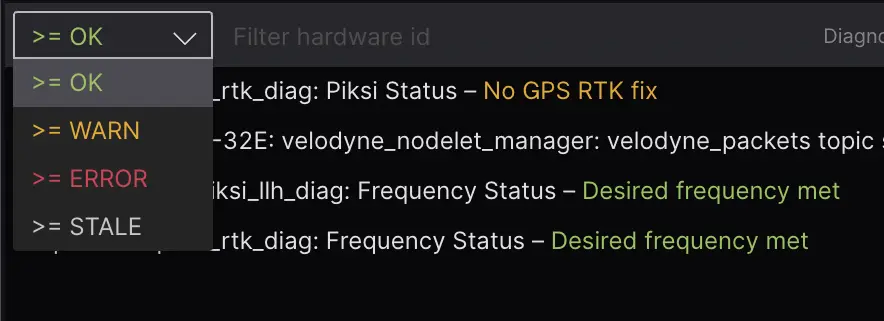

How Foxglove's Diagnostics Panel can improve fleet uptime

José L. MillánJosé L. Millán

José L. MillánJosé L. MillánSetting a new standard for robotics observability

Adrian MacneilAdrian Macneil

Adrian MacneilAdrian MacneilGet blog posts sent directly to your inbox.

Kit Wetzler ·
